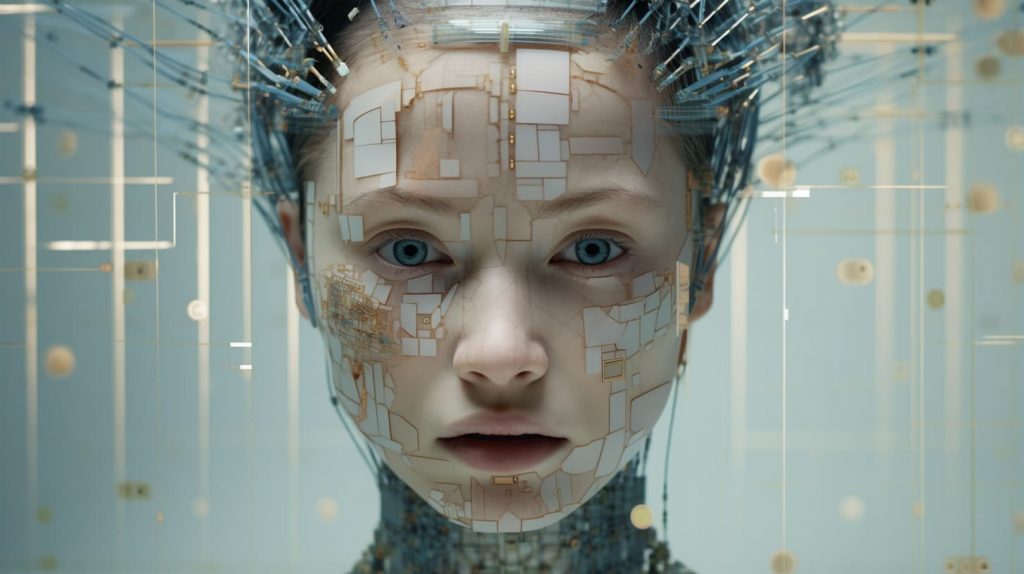

Understanding ‘Encoders’

- Encoder: At its core, an encoder is a part of a neural network designed to transform input data (like images) into a compact, abstract representation, often termed an “embedding” or “latent representation.”

- Role: The encoder’s primary role is to capture and compress the essential features of the input data, discarding unnecessary details.
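
To make the idea of "compressing input into an embedding" concrete, here is a minimal sketch. It assumes a toy linear encoder with random, untrained weights; `make_toy_encoder` and the 64-value "image" are hypothetical and only illustrate the change in dimensionality, not how a real encoder is built or trained.

```python
import random

def make_toy_encoder(input_dim, embed_dim, seed=0):
    """Return a hypothetical linear encoder: embedding = W @ x."""
    rng = random.Random(seed)
    # Random weights stand in for what a real encoder would learn.
    weights = [[rng.uniform(-1, 1) for _ in range(input_dim)]
               for _ in range(embed_dim)]

    def encode(x):
        # One dot product per embedding dimension.
        return [sum(w * v for w, v in zip(row, x)) for row in weights]

    return encode

# A flattened 8x8 grayscale "image" (64 values) compressed into 4 numbers.
image = [((i * 7) % 16) / 15 for i in range(64)]
encode = make_toy_encoder(input_dim=64, embed_dim=4)
embedding = encode(image)
print(len(image), "->", len(embedding))  # 64 -> 4
```

A real encoder stacks many nonlinear layers and learns its weights from data, but the interface is the same: a large input goes in and a short vector comes out.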

Visual Encoders

- Specialization: Visual encoders are specialized for processing visual data, such as images or videos.

- Architecture: They often use architectures like Convolutional Neural Networks (CNNs) that are designed to process grid-like data and capture spatial hierarchies in images.
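
The core operation behind a CNN layer can be sketched in a few lines. The example below assumes no padding, a stride of 1, and a hand-picked kernel; in a trained CNN the kernel values are learned, and `conv2d` here is an illustrative helper, not a library function.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to left-to-right intensity increases.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The same small kernel is reused at every position, which is why the response appears wherever the edge is. Stacking such layers lets later ones combine simple patterns like edges into larger structures.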

Training Neural Networks

- From Scratch: If you train a neural network (like a visual encoder) from scratch, it starts with random weights and learns features from the data you provide during the training process.

- Requires: Large amounts of data (labeled, when training is supervised) and significant computational resources.

Pre-trained Encoders

- Definition: “Pre-trained” means that the encoder has already been trained on a large dataset before being used for a specific task.

- Benefits:

  - Feature Extraction: These encoders have learned to recognize a wide range of features from the vast dataset they were trained on.

  - Transfer Learning: The features and knowledge they’ve acquired can be transferred to similar tasks, especially useful if the target task has limited data.

  - Saves Time: Using a pre-trained encoder can lead to faster model convergence and often better performance.
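
One common way to use a pre-trained encoder as a feature extractor is to keep it frozen and train only a small head on its outputs. The sketch below assumes a stand-in `frozen_encoder` with hand-written features (a real one would be a trained network) and a logistic-regression head; all names and data are hypothetical.

```python
import math

def frozen_encoder(image):
    """Stand-in for a pre-trained encoder: fixed, never updated below.
    Features here are hand-written (mean brightness, left/right contrast)."""
    n = len(image)
    half = n // 2
    mean = sum(image) / n
    contrast = sum(image[half:]) / (n - half) - sum(image[:half]) / half
    return [mean, contrast]

def train_head(features, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression head on top of fixed embeddings."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1 / (1 + math.exp(-z))
            err = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if z > 0 else 0

# Tiny labeled set: label 1 = brighter on the right, 0 = brighter on the left.
images = [
    [0.1, 0.2, 0.8, 0.9],
    [0.0, 0.1, 0.9, 1.0],
    [0.9, 0.8, 0.2, 0.1],
    [1.0, 0.9, 0.1, 0.0],
]
labels = [1, 1, 0, 0]

features = [frozen_encoder(img) for img in images]  # encoder is only evaluated
w, b = train_head(features, labels)                 # only the head is trained
predictions = [predict(w, b, f) for f in features]
```

Because the encoder already produces useful features, four labeled examples are enough for the head in this toy setting. That is the intuition behind transfer learning on small datasets.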

Common Datasets for Pre-training

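- ImageNet: A large labeled image dataset. The full collection has over 14 million images across more than 20,000 categories; the widely used ImageNet-1k subset (from the ILSVRC challenge) has about 1.28 million training images in 1,000 classes. Many pre-trained models available today were initially trained on ImageNet.

- COCO (Common Objects in Context): A dataset annotated with bounding boxes, segmentation masks, and captions, often used for tasks like object detection and image segmentation.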

Types of Pre-trained Visual Encoders

- CNN-based Encoders: Models like VGG, ResNet, and Inception are classic examples of CNN architectures often available as pre-trained encoders.

- Transformer-based Encoders: With the success of transformers in NLP, architectures like the Vision Transformer (ViT) have emerged. ViT splits an image into fixed-size patches and processes them as a sequence of tokens using self-attention.
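
The first step of a ViT-style encoder, turning an image into a sequence of patch tokens, can be sketched as follows. This covers only the patch-splitting step on a single-channel image; the linear projection, position embeddings, and attention layers that follow are omitted, and `image_to_patches` is a hypothetical helper.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (list of rows) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch row by row into a single token vector.
            patch = [
                image[top + r][left + c]
                for r in range(patch_size)
                for c in range(patch_size)
            ]
            patches.append(patch)
    return patches

# 4x4 image whose pixel values are simply 0..15, for easy inspection.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
tokens = image_to_patches(image, patch_size=2)
print(tokens)
# [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

Each inner list plays the role a word token plays in NLP: the transformer sees a sequence of four "tokens" rather than a 2D grid.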

Fine-tuning & Transfer Learning

- Adapting to Specific Tasks: While the pre-trained encoder has learned generic features from its original dataset, it can be further trained (fine-tuned) on a smaller, task-specific dataset to adapt those features to the new task.

- Example: A visual encoder pre-trained on ImageNet can be fine-tuned on medical images to detect specific conditions.
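
Fine-tuning differs from using a frozen encoder in that the encoder's weights are also updated, often with a smaller learning rate than the new head so the pre-trained knowledge is adjusted gently. The sketch below assumes a deliberately tiny model with one scalar weight per part; the weights, data, and learning rates are hypothetical.

```python
def fine_tune(encoder_w, head_w, data, lr_encoder=0.01, lr_head=0.1, epochs=50):
    """Continue training both parts of a tiny model:
    prediction = head_w * (encoder_w * x)."""
    for _ in range(epochs):
        for x, y in data:
            feature = encoder_w * x
            # Derivative of 0.5 * error**2 with respect to the prediction.
            error = head_w * feature - y
            grad_head = error * feature
            grad_encoder = error * head_w * x
            head_w -= lr_head * grad_head
            encoder_w -= lr_encoder * grad_encoder  # smaller step for the encoder
    return encoder_w, head_w

def mean_squared_error(encoder_w, head_w, data):
    return sum((head_w * encoder_w * x - y) ** 2 for x, y in data) / len(data)

pretrained_encoder_w = 2.0   # hypothetical weight "learned" on a source task
initial_head_w = 1.0         # freshly initialized task head
target_data = [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5)]  # target task: y = 3 * x

loss_before = mean_squared_error(pretrained_encoder_w, initial_head_w, target_data)
encoder_w, head_w = fine_tune(pretrained_encoder_w, initial_head_w, target_data)
loss_after = mean_squared_error(encoder_w, head_w, target_data)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.6f}")
```

In this toy run the head absorbs most of the change while the encoder weight shifts only slightly, which is the behavior the two learning rates are meant to encourage.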

Applications

- Image Classification: Categorizing entire images into classes.

- Object Detection: Recognizing multiple objects in images and pinpointing their locations.

- Image Segmentation: Classifying individual pixels in images.

- Embeddings: Generating compact representations for tasks like image retrieval.
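
Image retrieval with embeddings usually comes down to a nearest-neighbor search in embedding space. Here is a minimal sketch using cosine similarity; the file names and three-dimensional embeddings are made up for illustration, and real embeddings typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query, index, top_k=2):
    """Return the names of the top_k stored embeddings most similar to the query."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in scored[:top_k]]

# Hypothetical index: image name -> embedding produced by some encoder.
index = {
    "cat_1.jpg": [0.9, 0.1, 0.0],
    "cat_2.jpg": [0.8, 0.2, 0.1],
    "car_1.jpg": [0.0, 0.1, 0.9],
}
query_embedding = [1.0, 0.0, 0.0]
results = retrieve(query_embedding, index)
print(results)  # ['cat_1.jpg', 'cat_2.jpg']
```

For large collections, this exhaustive scan is typically replaced by an approximate nearest-neighbor index, but the similarity measure stays the same.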

Challenges & Considerations

- Data Mismatch: If the pre-training dataset is very different from the target task’s data, transfer learning might not be as effective.

- Computational Costs: While pre-trained models save training time, they can be large and demand significant resources during inference.
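
A rough sense of the resource cost comes from simple arithmetic: the memory for the weights alone is the parameter count times the bytes per parameter. The sketch below uses approximate, commonly cited parameter counts as assumptions; actual figures vary by implementation, and activations add further memory on top of the weights.

```python
def weight_memory_mb(num_parameters, bytes_per_parameter=4):
    """Memory for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_parameters * bytes_per_parameter / 1e6

# Approximate parameter counts (assumed, rounded).
approx_parameter_counts = {
    "ResNet-50": 25_600_000,
    "VGG-16": 138_000_000,
    "ViT-B/16": 86_000_000,
}

for name, count in approx_parameter_counts.items():
    fp32 = weight_memory_mb(count)                         # 4 bytes per weight
    fp16 = weight_memory_mb(count, bytes_per_parameter=2)  # 2 bytes per weight
    print(f"{name}: ~{fp32:.0f} MB in float32, ~{fp16:.0f} MB in float16")
```

Storing weights in a lower-precision format halves this footprint, which is one reason reduced precision is popular for inference.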

Conclusion

Pre-trained visual encoders leverage prior knowledge from extensive datasets to offer a head start when tackling new visual tasks. They are a form of transfer learning: knowledge learned in one domain is reused in another, providing a foundation that can be built upon for specific applications.